Tutorial big data analysis: Weather changes in the Carpathian Basin from 1900 to 2014 – Part 1/9

Analysis fundamentals

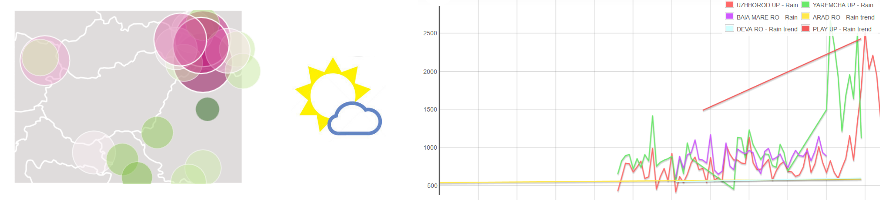

This experiment analyses the weather changes in the Carpathian Basin from 1900 to 2014 using a dataset of daily measurements from weather stations near our point of experiment.

Strong emphasis is put on interactive visualization, as it is essential for making the information easy to comprehend.

Big data analytics is gaining a lot of attention. This tutorial provides an overview of some open-source tools capable of supporting distributed analysis of huge datasets.

It also analyses the weather trends of the Carpathian Basin in Central Europe and provides an easy-to-use visualization of the changes.

Steps of a big data experiment

- Decide what you would like to measure, define your interests

- Do background research, check sources

- Gather the data

- Decide what information interests you, plan to convert raw data to information

- Measure, analyze

- Evaluate, validate, conclude

- Visualize, communicate

1. Decide what you would like to measure, define your interests

Weather data

- Available for public download

- Gaining a lot of attention because of current weather changes and extremes

- Interests me 🙂

2. Do background research, check sources

Check what is available:

- Data sources

- Earlier measurement and experiment data, the quality of the data and its presentation

- Analysis tools supporting the experiment: a proper toolset can save a lot of time

3. Gather the data

Publicly available and downloadable historical weather data from NOAA's National Climatic Data Center.

4. Decide what information interests you, plan to convert raw data to information

Changes in weather, temperature, rainfall and snow levels in my immediate neighborhood during the last century and the beginning of the 21st century.

The synthesized information must also be compatible with the visualization framework.

5. Measure, analyze

I have chosen the increasingly popular Apache Hadoop together with Pig, a high-level query language that runs on top of it, for a parallel, map-reduce based analysis (multiple nodes and threads for handling vast amounts of information).

Hadoop is an open-source distributed data analysis tool, which uses a so-called map-reduce method to speed up parallel data analytics on a cluster of machines.
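To make the map-reduce idea more tangible, here is a minimal single-machine sketch in plain Python. It is not Hadoop or Pig code and not the analysis used in this series; it only imitates the three phases (map, shuffle, reduce) on a handful of made-up records. The record layout `(station_id, year, temperature)` and all function names are assumptions chosen for illustration.

```python
from collections import defaultdict

# Hypothetical sample records: (station_id, year, daily mean temperature in °C).
# The values are invented for illustration; they do not come from the NOAA dataset.
RECORDS = [
    ("ST001", 1900, 10.0),
    ("ST001", 1900, 12.0),
    ("ST002", 1900, 14.0),
    ("ST001", 2014, 11.0),
    ("ST002", 2014, 15.0),
]


def map_record(record):
    """Map step: turn one raw record into a (key, value) pair."""
    _station, year, temperature = record
    return year, temperature


def shuffle(pairs):
    """Shuffle step: group all values that share the same key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_group(year, temperatures):
    """Reduce step: collapse all values of one key into a single result."""
    return year, sum(temperatures) / len(temperatures)


def yearly_mean_temperature(records):
    """Run map, shuffle and reduce in sequence on one machine."""
    pairs = (map_record(record) for record in records)
    groups = shuffle(pairs)
    return dict(reduce_group(year, temps) for year, temps in groups.items())


if __name__ == "__main__":
    print(yearly_mean_temperature(RECORDS))
```

For the sample records this yields a mean of 12.0 for 1900 and 13.0 for 2014. On a real cluster the map and reduce steps run on many machines at once, and the framework takes care of the shuffle between them; that distribution is what the sketch leaves out.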

6. Evaluate, validate, conclude

Check whether the output is valid. As weather analysis results are widely available, I've checked the statistics of other providers: if the results concur, the experiment is valid.

It is trickier if you don't have references. In that case, extracting a smaller dataset from the bigger one and analyzing it by hand, with another approach and toolset, can help to validate the method you've used for the analysis.
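The subset check described above could look roughly like the following sketch. It assumes the main analysis produced one mean temperature per year, picks a single year out of the raw records, recomputes the mean with an independent tool (Python's `statistics` module) and compares the two numbers. The record layout, the sample values and the function name `validate_against_subset` are hypothetical.

```python
import math
import statistics

# Hypothetical raw records: (station_id, year, daily mean temperature in °C).
RAW_RECORDS = [
    ("ST001", 1900, 10.0),
    ("ST001", 1900, 12.0),
    ("ST002", 1900, 14.0),
    ("ST001", 2014, 11.0),
    ("ST002", 2014, 15.0),
]

# Hypothetical output of the main analysis: year -> mean temperature.
PIPELINE_RESULT = {1900: 12.0, 2014: 13.0}


def validate_against_subset(records, pipeline_result, year, tolerance=1e-6):
    """Recompute one year's mean independently and compare it with the pipeline."""
    # Take the small subset: only the temperatures measured in the given year.
    subset = [temp for _station, rec_year, temp in records if rec_year == year]
    if not subset:
        raise ValueError(f"no records found for year {year}")
    # Independent calculation with a different tool than the main analysis.
    independent_mean = statistics.mean(subset)
    return math.isclose(independent_mean, pipeline_result[year], abs_tol=tolerance)


if __name__ == "__main__":
    for year in PIPELINE_RESULT:
        status = "OK" if validate_against_subset(RAW_RECORDS, PIPELINE_RESULT, year) else "MISMATCH"
        print(year, status)
```

A match on a few such subsets does not prove the whole analysis is right, but a mismatch is a cheap and early signal that the method needs another look.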

7. Visualize, communicate

You have the information you wanted, but without colors and flavors it is worth nothing, and it is even worse if nobody becomes aware of its existence.

So do not underestimate this part: create an attractive, search-engine-friendly report or a scientific publication that is easy to comprehend, to gain some attention for your work.

I've used some interactive web-based charts, as HTML5 provides a portable and SEO-friendly format for data visualization.